Augmented Reality

by Prof. Gudrun Klinker, Ph.D.

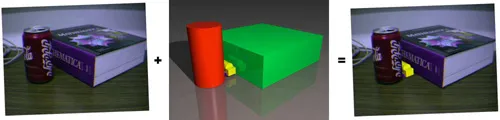

Augmented Reality (AR) is an emerging technology by which a user's view of the real world is augmented with additional information from a computer model. With mobile, wearable computers, users can access information without having to leave their workplace. They can manipulate and examine real objects and simultaneously receive additional information about them or the task at hand. Using Augmented Reality technology, the information is presented three-dimensionally, integrated into the real world. By exploiting people's visual and spatial skills for navigating a three-dimensional world, Augmented Reality constitutes a particularly promising new user interface paradigm.

Research in Augmented Reality and wearable computing is receiving increasing attention, driven by striking progress in many subfields (in particular advances in computer miniaturization, mobile networking, and sensing technology) and by fascinating live demonstrations. Augmented Reality is, by its very nature, a highly interdisciplinary field: Augmented Reality researchers work in areas such as signal processing, computer vision, graphics, user interfaces, wearable computing, mobile computing, computer networks, distributed computing, information access, information visualization, software engineering, and the design of new displays.

Research Issues

Our research focuses on bringing AR technology into real applications. To this end, we look not only at "classical AR" solutions but also consider suitable variations, combining AR with concepts of mobile and ubiquitous computing. This leads to the concept of Ubiquitous Augmented Reality (UAR).

We investigate (not necessarily disjoint) issues with respect to:

- Sensing:

To track users and mobile objects in the real world, suitable sensors need to be registered, calibrated, and combined in real time (i.e., at video rate). We use, test, evaluate, and enhance sensing technology, placing particular emphasis on establishing sound characterizations of measurement noise.

- Ubiquitous Tracking (Sensor Fusion):

AR-based tracking strives for high precision and speed. Location-based tracking support focuses on generality, flexibility, wide range of use, and low cost. We work towards a framework for sensor fusion that allows for flexible, dynamically configurable combinations of several trackers, using location-based approaches to generalize and back up more brittle and constrained high-quality tracking technology.
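As a rough illustration of what combining trackers of different quality can mean, the sketch below fuses independent one-dimensional position estimates by inverse-variance weighting. This is a textbook technique chosen for illustration, not a description of the framework mentioned above; the function names, the sample readings, and the variance figures are all hypothetical.

```python
from statistics import variance


def noise_variance(samples):
    """Estimate measurement-noise variance from repeated readings
    of a stationary target (sample variance of the readings)."""
    return variance(samples)


def fuse(estimates):
    """Fuse independent (value, variance) estimates of the same quantity
    by inverse-variance weighting. Returns (fused_value, fused_variance)."""
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * x for w, (x, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical readings in metres: a precise but brittle tracker and a
# coarse but widely available location-based tracker.
precise = (2.00, 0.0001)
coarse = (2.30, 0.09)

fused_value, fused_var = fuse([precise, coarse])

# If the precise tracker drops out, the coarse one still yields an estimate.
fallback_value, fallback_var = fuse([coarse])
```

With both trackers available, the fused estimate stays close to the precise reading and its variance is smaller than either input variance. When only the coarse tracker remains, the result simply falls back to its reading, which mirrors the idea of using location-based tracking as a backup.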

- 3D Information Presentation:

AR presents computer-based information three-dimensionally embedded into the real surroundings of a user. Visualizations can be shown on head-mounted displays (HMDs), mobile monitors (tablet PCs, PDAs, mobile phones, or intelligent instruments), nearby stationary monitors (desktops, walls), or as direct projections onto real objects. We conceive, investigate and compare such presentation techniques, as well as flexible, context-dependent combinations thereof.

- 3D Interaction:

When computer-based information is embedded within the real environment of users, they can react to it directly within that real-world context - by manipulating real objects, in addition to gestures, speech, 3D pointing, and "standard" human-computer interaction via so-called "WIMP" (desktop-based) user interfaces. We investigate suitable combinations of such interfaces, with particular emphasis on direct 3D human-computer interaction embedded in the real world (tangible UIs).

- HCI in Cars:

We work on user-centered driver assistance with minimalistic interactions for active safety. The focus lies on embedded Augmented Reality and its use in the assistance functions of in-vehicle information systems. To improve driving performance, the driver is meant to remain in the control loop of the driving task instead of being taken out of it to gather information from secondary sources such as warning or navigation systems and displays.

- Multitouch Displays:

Recently, interaction devices based on natural gestures have become increasingly widespread, e.g. with Jeff Han's work, Microsoft Surface, or the Apple iPhone. These devices particularly support multi-touch interaction, enabling a single user to work with both hands or even several users to work in parallel. In this project, we explore the applications of multi-touch surfaces, both large and small. We are building and evaluating new hardware concepts as well as developing software to take advantage of the new interaction modalities.

- System Architectures for Ubiquitous Augmented Reality:

To provide ubiquitous tracking, information presentation, and interaction facilities, the underlying system architecture needs to be very flexible, adaptive, and extensible. We have built an ad-hoc, peer-to-peer framework, which we are evaluating and, where appropriate, extending or simplifying. We are also exploring how to base the system on concepts and tools for specifying, customizing, and analyzing desired system behavior.

- Industrial Augmented Reality:

We actively pursue intense relationships with industry partners who provide us with information pertaining to the real-world requirements and trade-offs of transferring AR technology into real applications.

Important Links

- Augmented Reality Further Reading (literature suggestions for further reading)

- The DWARF Annotated Augmented Reality Bibliography

Links to related research:

- http://www.csl.sony.co.jp/projects/ar/ref.html

- http://www.se.rit.edu/~jrv/research/ar/

- http://www.media.mit.edu/wearables/lizzy/wearlinks.html

- http://www.research.microsoft.com/ierp/

- http://www.cs.cmu.edu/~cil/vision.html

- http://mambo.ucsc.edu/psl/cg.html

Conferences & Journals

Journals

- Communications of the ACM, Special issue on computer augmented environments: back to the real world, Vol. 36, No. 7, July 1993.

- Presence, Special issue on Augmented Reality, Vol. 6, No. 4, August 1997.

ISMAR Series

- 1st International Workshop on Augmented Reality (IWAR'98), San Francisco, Nov. 1998.

- 2nd International Workshop on Augmented Reality (IWAR'99), San Francisco, Oct. 1999.

- 1st International Symposium on Mixed Reality (ISMR'99), Yokohama, Japan, March 1999.

- 1st International Symposium on Augmented Reality (ISAR 2000), Munich, Oct. 2000.

- 2nd International Symposium on Mixed Reality (ISMR'01), Yokohama, Japan, March 2001.

- 2nd International Symposium on Augmented Reality (ISAR 2001), New York, Oct. 2001.

- 1st International Symposium on Mixed and Augmented Reality (ISMAR 2002), Darmstadt, Oct. 2002.

- 2nd International Symposium on Mixed and Augmented Reality (ISMAR 2003), Tokyo, Oct. 2003.

- 3rd International Symposium on Mixed and Augmented Reality (ISMAR 2004), Arlington, VA, Nov. 2004.

- 4th International Symposium on Mixed and Augmented Reality (ISMAR 2005), Vienna, Oct. 2005.

- 5th International Symposium on Mixed and Augmented Reality (ISMAR 2006), Santa Barbara, Oct. 2006.

- 6th International Symposium on Mixed and Augmented Reality (ISMAR 2007), Nara, Japan, Nov. 2007.

ISWC Series

- 1st International Symposium on Wearable Computers (ISWC'97), Oct. 1997.

- 2nd International Symposium on Wearable Computers (ISWC'98), Oct. 1998.

- 3rd International Symposium on Wearable Computers (ISWC'99), Oct. 1999.

- 4th International Symposium on Wearable Computers (ISWC'00), Atlanta, Oct. 2000.

- 5th International Symposium on Wearable Computers (ISWC'01), Zürich, Oct. 2001.

- 6th International Symposium on Wearable Computers (ISWC'02), Seattle, Oct. 2002.

- 7th International Symposium on Wearable Computers (ISWC'03), White Plains (NY), Oct. 2003.

Ubicomp Series

- 1st International Symposium on Handheld and Ubiquitous Computing (HUC'99), Karlsruhe, Sept. 1999.

- 2nd International Symposium on Handheld and Ubiquitous Computing (huc2k), Bristol, Sept. 2000.

- 3rd International Conference on Ubiquitous Computing (Ubicomp 01), Atlanta, Sept. 2001.

- 4th International Conference on Ubiquitous Computing (Ubicomp 2002), Göteborg, Sept. 2002.

- 5th International Conference on Ubiquitous Computing (Ubicomp 2003), Seattle, Sept. 2003.

Associated Chairs (TU Munich, Computer Science)

- TU München, Informatik, Lehrstuhl für Informatikanwendungen in der Medizin (CAMP-AR) (Prof. Navab) (since 2005)

- TU München, Informatik, Lehrstuhl für Angewandte Softwaretechnik (Prof. Brügge)

Collaborating Researchers and Research Groups

- TU München, Fakultät für Maschinenwesen:

  - Lehrstuhl für Ergonomie (LfE) (Prof. Klaus-Jürgen Bengler, Prof. Heiner Bubb)

  - Lehrstuhl für Fördertechnik Materialfluss Logistik (FML) (Prof. Willibald A. Günthner)

  - Lehrstuhl für Umformtechnik und Gießereiwesen (UTG) (Prof. Hartmut Hoffmann)

  - Lehrstuhl für Automatisierung und Informationssysteme (AIS) (Prof. Birgit Vogel-Heuser)

- TU München, Fakultät für Chemie, Fachgebiet Molekulare Katalyse (Prof. Fritz Kühn)

- TU München, Feuerwehr (Thomas Schmidt)

- TU Graz, Institute for Computer Graphics and Vision (ICG): Studierstube (Prof. Dieter Schmalstieg)

- University of Barcelona, EVENT Lab for Neuroscience and Technology (Mel Slater)

- Bauhaus Universität Weimar, now Johannes Kepler Universität Linz (Prof. Oliver Bimber)