Ewald-based Long-Range Message Passing for Molecular Graphs

by Arthur Kosmala, Johannes Gasteiger, Nicholas Gao and Stephan Günnemann

Published at the 40th International Conference on Machine Learning (ICML), 2023

Abstract

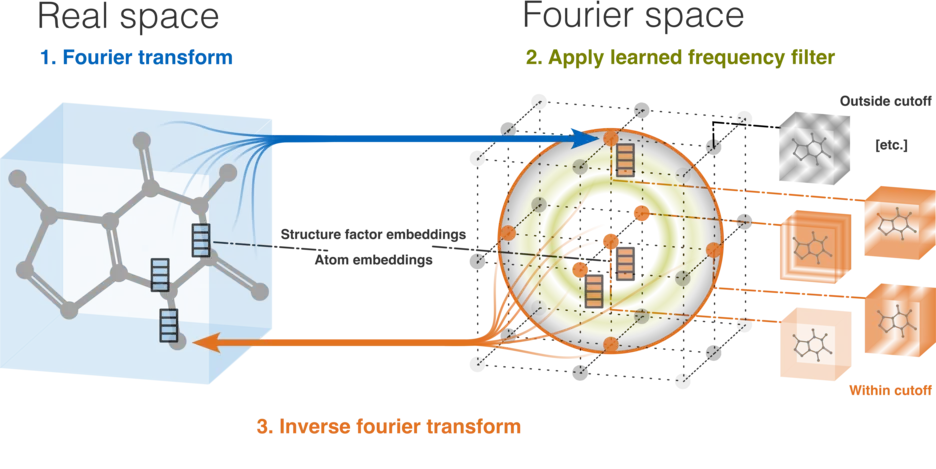

Neural architectures that learn potential energy surfaces from molecular data have undergone fast improvement in recent years. A key driver of this success is the Message Passing Neural Network (MPNN) paradigm. Its favorable scaling with system size partly relies upon a spatial distance limit on messages. While this focus on locality is a useful inductive bias, it also impedes the learning of long-range interactions such as electrostatics and van der Waals forces. To address this drawback, we propose Ewald message passing: a nonlocal Fourier space scheme which limits interactions via a cutoff on frequency instead of distance, and is theoretically well-founded in the Ewald summation method. It can serve as an augmentation on top of existing MPNN architectures as it is computationally cheap and agnostic to other architectural details. We test the approach with four baseline models and two datasets containing diverse periodic (OC20) and aperiodic structures (OE62). We observe robust improvements in energy mean absolute errors across all models and datasets, averaging 10% on OC20 and 16% on OE62. Our analysis shows an outsize impact of these improvements on structures with high long-range contributions to the ground truth energy.
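The central idea of the abstract is to limit interactions by a cutoff on frequency rather than on distance. A toy sketch can make this concrete. It assumes a cubic periodic cell of side `cell_length`, one scalar feature per atom, and a fixed Gaussian `filter_fn` standing in for the learned filter a real model would use. All function names are hypothetical, and this is an illustration of the general Fourier-space scheme, not the code from this repository. Each atom's features are projected onto plane waves with |k| at most `k_cutoff` to form structure factors. The structure factors are then scaled by the filter and projected back onto each atom's position.

```python
import cmath
import math
from itertools import product


def reciprocal_vectors(cell_length, k_cutoff):
    """Wave vectors k = 2*pi*n / L of a cubic cell with 0 < |k| <= k_cutoff."""
    n_max = int(k_cutoff * cell_length / (2 * math.pi))
    ks = []
    for n in product(range(-n_max, n_max + 1), repeat=3):
        if n == (0, 0, 0):
            continue  # skip the k = 0 (mean-field) term
        k = tuple(2 * math.pi * ni / cell_length for ni in n)
        if math.sqrt(sum(c * c for c in k)) <= k_cutoff:
            ks.append(k)
    return ks


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def ewald_messages(positions, features, cell_length, k_cutoff, filter_fn):
    """Toy Fourier-space messages: frequency cutoff instead of distance cutoff.

    filter_fn maps |k| to a real weight (hypothetical stand-in for a learned filter).
    """
    ks = reciprocal_vectors(cell_length, k_cutoff)
    # Structure factors: s_k = sum_j h_j * exp(-i k . r_j), one pass over all atoms.
    s = [sum(h * cmath.exp(-1j * dot(k, r)) for h, r in zip(features, positions))
         for k in ks]
    messages = []
    for r in positions:
        # m_i = Re sum_k f(|k|) * s_k * exp(i k . r_i)
        m = sum(filter_fn(math.sqrt(dot(k, k))) * s_k * cmath.exp(1j * dot(k, r))
                for k, s_k in zip(ks, s))
        messages.append(m.real)
    return messages


positions = [(0.0, 0.0, 0.0), (1.0, 2.0, 0.5), (3.0, 1.0, 2.0)]
features = [1.0, -0.5, 2.0]
gaussian = lambda k: math.exp(-k * k / 4.0)
msgs = ewald_messages(positions, features, 5.0, 3.0, gaussian)
```

Written out pairwise, each message equals a sum over *all* atoms, weighted by the smooth periodic kernel K(d) = Σ_k f(|k|) cos(k·d). Every atom therefore hears from every other atom, including distant ones. Because the structure factors are shared, the cost is O(N·K) for N atoms and K wave vectors rather than O(N²).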

Cite

Please cite our paper if you use the model, experimental results, or our code in your own work:

@inproceedings{kosmala_ewald_2023,
    title = {Ewald-based Long-Range Message Passing for Molecular Graphs},
    author = {Kosmala, Arthur and Gasteiger, Johannes and Gao, Nicholas and G{\"u}nnemann, Stephan},
    booktitle = {International Conference on Machine Learning (ICML)},
    year = {2023}
}