Prior2Former - Evidential Modeling of Mask Transformers for Assumption-Free Open-World Panoptic Segmentation

by Sebastian Schmidt, Julius Körner, Dominik Fuchsgruber, Stefano Gasperini, Federico Tombari and Stephan Günnemann.

IEEE International Conference on Computer Vision (ICCV), 2025 (Highlight).

[arXiv | ICCV | ICCV Virtual Event | Code | Video]

Abstract:

In panoptic segmentation, individual instances must be separated within semantic classes.

As state-of-the-art methods rely on a pre-defined set of classes, they struggle with novel categories and out-of-distribution (OOD) data. This is particularly problematic in safety-critical applications, such as autonomous driving, where reliability in unseen scenarios is essential.

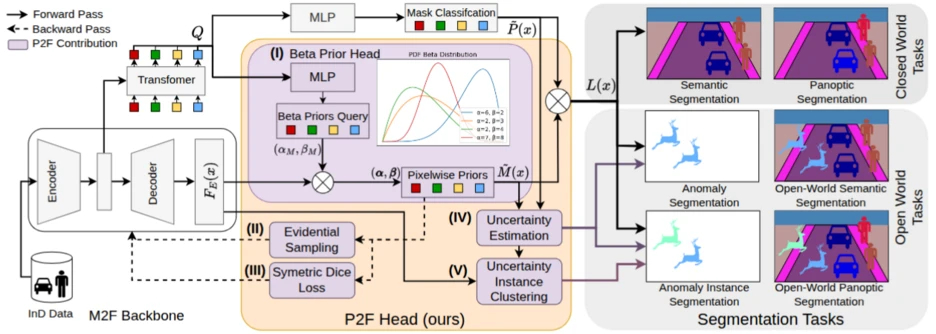

We address the gap between outstanding benchmark performance and reliability by proposing Prior2Former (P2F), the first approach for segmentation vision transformers rooted in evidential learning. P2F extends the mask vision transformer architecture by incorporating a Beta prior for computing model uncertainty in pixel-wise binary mask assignments. This design yields high-quality uncertainty estimates that effectively detect novel and OOD objects, enabling state-of-the-art anomaly instance segmentation and open-world panoptic segmentation.

Unlike most segmentation models addressing unknown classes, P2F operates without access to OOD data samples or contrastive training on void (i.e., unlabeled) classes, making it highly applicable in real-world scenarios where such prior information is unavailable.

Additionally, P2F can be flexibly applied to anomaly instance and panoptic segmentation.

Through comprehensive experiments on the Cityscapes, COCO, SegmentMeIfYouCan, and OoDIS datasets, P2F demonstrates state-of-the-art performance across the board.

Modern AI vision systems excel at recognizing known objects but fail when faced with the unknown. This limitation is critical in safety-focused domains like autonomous driving, robotics, or medical imaging. Prior2Former (P2F) bridges this gap by extending mask vision transformers with evidential learning, providing accurate predictions and reliable uncertainty estimates — without needing out-of-distribution (OOD) data or external models.

What is P2F?

Prior2Former (P2F) is the first evidential mask transformer designed for assumption-free open-world panoptic segmentation. It not only segments known objects with precision but also detects novel and unseen categories by modeling uncertainty in each pixel.

Key Innovations:

- 📊 Evidential Modeling: Uses Beta priors to quantify model confidence and uncertainty.

- 🖼️ Universal Segmentation: Handles closed-world and open-world tasks — including anomaly segmentation and instance-level unknown detection — with one unified architecture.

- 🚀 Assumption-Free: No reliance on OOD data, void classes, or external foundation models.

- 🏆 State-of-the-Art Results: Proven superior performance across benchmarks like Cityscapes, COCO, SegmentMeIfYouCan, and OoDIS.

Why It Matters

Traditional segmentation models struggle outside their training data, which makes them unreliable in real-world scenarios. P2F changes that by:

- Enhancing safety in autonomous driving and robotics.

- Enabling trustworthy AI through uncertainty-aware predictions.

- Offering a scalable solution that works even when no prior information about anomalies is available.

⚙️ The 5 Technical Pillars of Prior2Former

- Beta-Prior Head

Instead of directly predicting binary masks with sigmoids, P2F predicts the concentration parameters (α, β) of a Beta distribution per pixel–mask assignment. These parameters serve as evidence for the positive and negative classes, enabling principled uncertainty quantification.

- Evidential Sampling

During training, not all pixels are equally informative. P2F prioritizes pixels with high evidential uncertainty when computing the loss. This uncertainty-aware sampling accelerates training and ensures the model learns more from ambiguous regions.

- Symmetric Dice Loss

Standard Dice loss is often biased toward the negative class (background). P2F introduces a symmetric variant that balances updates for α and β, preventing either side of the distribution from dominating and leading to sharper, more reliable mask predictions.

- Uncertainty Estimation

At inference, P2F combines mask evidence (from the Beta prior) with class confidence to produce pixel-level uncertainty scores. This enables robust anomaly detection without needing any explicit out-of-distribution (OOD) data during training.

- Uncertainty Instance Clustering

High-uncertainty pixels are clustered (using DBSCAN in embedding space) into coherent object instances. This step allows P2F to separate novel or anomalous objects into distinct segments, a key capability for open-world panoptic segmentation.
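As a rough illustration of the Beta-Prior Head, the sketch below maps two raw network outputs for one pixel–mask pair to Beta concentration parameters. The softplus evidence mapping, the uniform Beta(1, 1) prior, the vacuity score 2 / (α + β), and the names `beta_mask_head` and `BetaMask` are assumptions borrowed from common evidential-learning practice, not details confirmed for P2F. What it shows: a single sigmoid probability of 0.5 cannot tell "strong but conflicting evidence" apart from "no evidence at all", whereas the Beta parameters can.

```python
import math
from dataclasses import dataclass


def softplus(x: float) -> float:
    # Numerically stable log(1 + exp(x)); always > 0.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


@dataclass
class BetaMask:
    alpha: float  # prior + evidence that the pixel belongs to the mask
    beta: float   # prior + evidence that it does not

    @property
    def prob(self) -> float:
        # Expected mask probability E[p] = alpha / (alpha + beta).
        return self.alpha / (self.alpha + self.beta)

    @property
    def vacuity(self) -> float:
        # Two-outcome subjective-logic uncertainty 2 / (alpha + beta):
        # 1.0 with no evidence, approaching 0 as evidence accumulates.
        return 2.0 / (self.alpha + self.beta)


def beta_mask_head(logit_pos: float, logit_neg: float) -> BetaMask:
    # Hypothetical head: softplus evidence on top of a uniform Beta(1, 1) prior.
    return BetaMask(alpha=1.0 + softplus(logit_pos), beta=1.0 + softplus(logit_neg))


# Strong but conflicting evidence vs. no evidence: same mean, different vacuity.
conflict = beta_mask_head(8.0, 8.0)
unknown = beta_mask_head(-8.0, -8.0)
print(conflict.prob, round(conflict.vacuity, 3))  # 0.5 0.111
print(unknown.prob, round(unknown.vacuity, 3))    # 0.5 1.0
```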
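Evidential Sampling can be sketched in the style of the point sampling that mask transformers such as Mask2Former use for their mask losses: oversample random candidate pixels, keep the most uncertain share, and fill the remaining slots at random. Scoring pixels by vacuity 2 / (α + β), the ratios 3.0 and 0.75, and the name `evidential_point_sampling` are hypothetical choices for illustration; the criterion P2F actually uses may differ.

```python
import random
from typing import List, Optional, Sequence


def evidential_point_sampling(
    alphas: Sequence[float],
    betas: Sequence[float],
    num_points: int,
    oversample_ratio: float = 3.0,
    importance_ratio: float = 0.75,
    rng: Optional[random.Random] = None,
) -> List[int]:
    """Return pixel indices for the loss, favouring low-evidence pixels.

    Hypothetical sketch: draw oversampled random candidates, keep the most
    uncertain share of them, and fill the remaining slots uniformly at random.
    """
    n = len(alphas)
    if num_points > n:
        raise ValueError("num_points cannot exceed the number of pixels")
    rng = rng or random.Random(0)
    # Vacuity 2 / (alpha + beta): 1.0 with no evidence, near 0 with lots of it.
    vacuity = [2.0 / (a + b) for a, b in zip(alphas, betas)]
    num_candidates = min(n, int(num_points * oversample_ratio))
    candidates = rng.sample(range(n), num_candidates)
    # Most uncertain candidates first.
    candidates.sort(key=lambda i: vacuity[i], reverse=True)
    num_uncertain = min(int(num_points * importance_ratio), num_candidates)
    chosen = candidates[:num_uncertain]
    chosen_set = set(chosen)
    # Random fill keeps some gradient flowing to confident regions too.
    rest = [i for i in range(n) if i not in chosen_set]
    chosen += rng.sample(rest, num_points - num_uncertain)
    return chosen
```

The random share is a deliberate design choice in this sketch: sampling only the most uncertain pixels would leave confident regions without any loss signal.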
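One plausible reading of the Symmetric Dice Loss is to average a soft Dice term on the foreground mask with a Dice term on its complement, using the Beta mean α / (α + β) as the predicted probability. This is a hypothetical sketch: the names `dice_loss` and `symmetric_dice_loss` and the smoothing constant are assumptions, and the formulation in the paper may act on α and β differently.

```python
from typing import Sequence


def dice_loss(pred: Sequence[float], target: Sequence[float], eps: float = 1.0) -> float:
    # Smoothed soft Dice loss: 1 - (2 * sum(P * T) + eps) / (sum(P) + sum(T) + eps).
    inter = sum(p * t for p, t in zip(pred, target))
    return 1.0 - (2.0 * inter + eps) / (sum(pred) + sum(target) + eps)


def symmetric_dice_loss(
    alphas: Sequence[float],
    betas: Sequence[float],
    target: Sequence[float],
    eps: float = 1.0,
) -> float:
    # Expected foreground probability under each pixel's Beta(alpha, beta).
    p_fg = [a / (a + b) for a, b in zip(alphas, betas)]
    p_bg = [1.0 - p for p in p_fg]
    t_bg = [1.0 - t for t in target]
    # Hypothetical symmetric form: score the foreground mask and its complement
    # with equal weight, so the loss treats the alpha and beta sides alike.
    return 0.5 * (dice_loss(p_fg, target, eps) + dice_loss(p_bg, t_bg, eps))
```

Swapping α with β and flipping the target leaves this loss unchanged, which is the kind of balance between the two sides that the text describes.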
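For Uncertainty Estimation, the combination of mask evidence and class confidence could look like the following per-pixel sketch. It follows the Mask2Former-style aggregation of class probabilities times mask probabilities and additionally down-weights each query by its evidence (1 - vacuity). The weighting scheme, the clipping, and the name `pixel_uncertainty` are assumptions for illustration, not P2F's published scoring rule.

```python
from typing import Sequence


def pixel_uncertainty(
    class_probs: Sequence[Sequence[float]],
    alphas: Sequence[float],
    betas: Sequence[float],
) -> float:
    """Hypothetical per-pixel uncertainty score in [0, 1].

    class_probs[q][c]: probability of known class c for query q (the
        "no object" column already removed).
    alphas[q], betas[q]: Beta parameters of query q's mask at this pixel.
    """
    num_classes = len(class_probs[0])
    class_scores = [0.0] * num_classes
    for probs, a, b in zip(class_probs, alphas, betas):
        mask_prob = a / (a + b)         # expected mask membership E[p]
        evidence = 1.0 - 2.0 / (a + b)  # 1 - vacuity: 0 without evidence
        for c in range(num_classes):
            class_scores[c] += probs[c] * mask_prob * evidence
    # Overlapping queries can push a score above 1, so clip before inverting.
    support = min(1.0, max(class_scores))
    # High when no known class is backed by confident mask evidence.
    return 1.0 - support
```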
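Uncertainty Instance Clustering can be sketched as a plain DBSCAN over the embeddings of pixels whose uncertainty exceeds a threshold. The threshold, `eps`, `min_samples`, the source of the pixel embeddings, and the helper names `dbscan` and `cluster_unknown_instances` are hypothetical. In practice one would more likely call `sklearn.cluster.DBSCAN(eps=..., min_samples=...).fit_predict(...)`; the hand-written loop is kept here only so the sketch runs without dependencies.

```python
import math
from typing import Dict, List, Optional, Sequence


def dbscan(points: Sequence[Sequence[float]], eps: float, min_samples: int) -> List[int]:
    """Plain DBSCAN: returns a cluster id per point, or -1 for noise."""
    n = len(points)
    labels: List[Optional[int]] = [None] * n  # None marks "not visited yet"

    def neighbours(i: int) -> List[int]:
        # Includes i itself, matching the usual min_samples convention.
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_samples:
            labels[i] = -1  # noise for now; may become a border point later
            continue
        cluster += 1
        labels[i] = cluster
        queue = list(seeds)
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # former noise becomes a border point
                continue
            if labels[j] is not None:
                continue  # already assigned to this cluster
            labels[j] = cluster
            j_seeds = neighbours(j)
            if len(j_seeds) >= min_samples:
                queue.extend(j_seeds)  # only core points grow the cluster
    return [label if label is not None else -1 for label in labels]


def cluster_unknown_instances(
    uncertainty: Sequence[float],
    embeddings: Sequence[Sequence[float]],
    threshold: float = 0.5,
    eps: float = 0.5,
    min_samples: int = 3,
) -> Dict[int, int]:
    """Map pixel index -> unknown-instance id for high-uncertainty pixels."""
    # Keep only pixels above the (hypothetical) uncertainty threshold.
    idx = [i for i, u in enumerate(uncertainty) if u > threshold]
    labels = dbscan([embeddings[i] for i in idx], eps, min_samples)
    # DBSCAN noise (-1) is left unassigned rather than forced into an instance.
    return {p: lab for p, lab in zip(idx, labels) if lab != -1}
```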