Model Collapse Is Not a Bug but a Feature in Machine Unlearning for LLMs

This page links to additional material for our ICLR 2026 paper:

Model Collapse Is Not a Bug but a Feature in Machine Unlearning for LLMs

Yan Scholten, Sophie Xhonneux, Leo Schwinn*, Stephan Günnemann*

International Conference on Learning Representations, ICLR 2026

Abstract

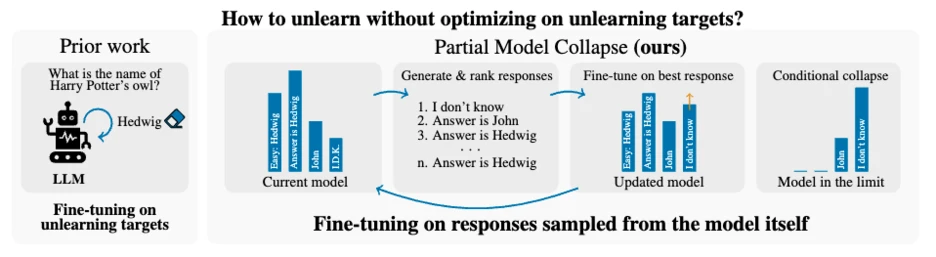

Current unlearning methods for LLMs optimize on the private information they seek to remove by incorporating it into their fine-tuning data. We argue that this not only risks reinforcing exposure to sensitive data but also fundamentally contradicts the principle of minimizing its use. As a remedy, we propose a novel unlearning method, Partial Model Collapse (PMC), which does not require unlearning targets in the unlearning objective. Our approach is inspired by recent observations that training generative models on their own generations leads to distribution collapse, effectively removing information from model outputs. Our central insight is that model collapse can be leveraged for machine unlearning by deliberately triggering it for the data we aim to remove. We show theoretically that our approach converges to the desired outcome, i.e., the model unlearns the data targeted for removal. We empirically demonstrate that PMC overcomes three key limitations of existing unlearning methods that explicitly optimize on unlearning targets, and that it more effectively removes private information from model outputs while preserving general model utility. Overall, our contributions represent an important step toward more comprehensive unlearning that aligns with real-world privacy constraints.
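The collapse phenomenon the abstract builds on can be illustrated with a toy simulation. The sketch below is not PMC and is not taken from the paper: it assumes a "model" that is just a categorical distribution over four candidate answers, and it "retrains" that model by maximum likelihood on a small batch of its own samples. All names (`self_train`, `entropy`, the probabilities, the sample size) are hypothetical choices made for illustration. Under these assumptions the distribution tends to lose entropy over generations and concentrate on a single answer. In this toy the collapse is undirected and can settle on any answer, whereas the abstract describes triggering collapse deliberately for the data to be removed; how PMC steers the collapse is not specified on this page.

```python
import math
import random
from collections import Counter


def entropy(probs):
    """Shannon entropy (in nats) of a categorical distribution."""
    return sum(-p * math.log(p) for p in probs if p > 0)


def self_train(probs, n_samples, generations, rng):
    """Repeatedly refit a categorical distribution on its own samples.

    Each generation draws n_samples answers from the current distribution
    and replaces the distribution with the empirical frequencies (the
    maximum-likelihood fit). Answers that receive zero samples drop to
    probability zero and can never come back.
    """
    k = len(probs)
    for _ in range(generations):
        samples = rng.choices(range(k), weights=probs, k=n_samples)
        counts = Counter(samples)
        probs = [counts[i] / n_samples for i in range(k)]
    return probs


rng = random.Random(0)  # fixed seed so repeated runs behave the same
initial = [0.4, 0.3, 0.2, 0.1]  # toy distribution over four answers
final = self_train(initial, n_samples=20, generations=500, rng=rng)

print("initial entropy:", round(entropy(initial), 3))
print("final entropy:  ", round(entropy(final), 3))
print("final distribution:", final)
```

The small batch size is what drives the effect here: with only 20 samples per generation, sampling noise compounds across generations, so the information held in the original distribution is progressively lost.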

Cite

@misc{scholten2025modelcollapse,
  title={Model Collapse Is Not a Bug but a Feature in Machine Unlearning for LLMs},
  author={Yan Scholten and Sophie Xhonneux and Leo Schwinn and Stephan G{\"u}nnemann},
  year={2025},
  eprint={2507.04219},
  archivePrefix={arXiv},
  primaryClass={cs.LG},
  url={https://arxiv.org/abs/2507.04219},
}