What Expressivity Theory Misses: Message Passing Complexity for GNNs

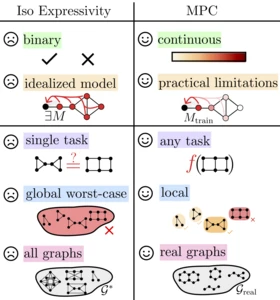

vs. benefits of MPC.

Graph Neural Networks (GNNs) are typically analyzed through expressivity theory by characterizing their ability to distinguish non-isomorphic graphs. This framework has driven extensive research toward developing more expressive architectures, under the assumption that higher expressivity translates to better empirical performance. But what if this focus is misguided? We challenge this prevailing paradigm by showing that expressivity theory relies on unrealistic assumptions and provides only binary characterizations that miss practical limitations like over-squashing and under-reaching. To narrow this theory-practice gap, we introduce Message Passing Complexity (MPC): a continuous measure that quantifies how difficult it is for information to propagate through graph structures to solve specific tasks. Using fundamental graph tasks as testbeds, we demonstrate that MPC's predictions correlate strongly with empirical performance, revealing that success often depends not on maximizing expressivity but on minimizing task-specific complexity through appropriate architectural choices.

Links

Citation

If you use this work in your research, please cite this paper:

@inproceedings{Kemper2025Complexity,

title={What Expressivity Theory Misses: Message Passing Complexity for GNNs},

author={Kemper, Niklas and Wollschl{\"a}ger, Tom and G{\"u}nnemann, Stephan},

booktitle={Neural Information Processing Systems (NeurIPS)},

year={2025},

url={https://arxiv.org/abs/2509.01254} }