Localized Randomized Smoothing

This page links to additional material for our paper

Localized Randomized Smoothing for Collective Robustness Certification

by Jan Schuchardt*, Tom Wollschläger*, Aleksandar Bojchevski and Stephan Günnemann

International Conference on Learning Representations (ICLR) 2023

(Selected for a spotlight presentation)

Links

Abstract

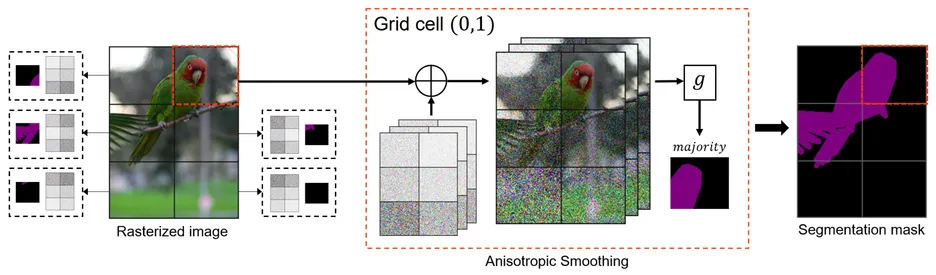

Models for image segmentation, node classification and many other tasks map a single input to multiple labels. By perturbing this single shared input (e.g. the image), an adversary can manipulate several predictions (e.g. misclassify several pixels). A recent collective robustness certificate provides strong guarantees on the number of predictions that are simultaneously robust. This method is, however, limited to strictly local models, where each prediction is associated with a small receptive field. We propose a more general collective certificate for the larger class of softly local models, where each output depends on the entire input but assigns different levels of importance to different input regions (e.g. based on their proximity in the image). The certificate is based on our novel localized randomized smoothing approach, where the random perturbation strength for different input regions is proportional to their importance for the outputs. The resulting locally smoothed model yields strong collective guarantees while maintaining high prediction quality on both image segmentation and node classification tasks.
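As a rough illustration of the smoothing step only (not the certificate, and not the authors' implementation), the sketch below adds noise whose standard deviation varies across input regions and takes a majority vote over the noisy predictions for a single output. The names `localized_smoothing_vote`, `model` and `sigma_map` are hypothetical, the choice of Gaussian noise is an assumption, and how `sigma_map` should be derived from region importance is left to the paper.

```python
import numpy as np


def localized_smoothing_vote(model, x, sigma_map, n_samples=100, seed=0):
    """Majority vote for one output under region-dependent Gaussian noise.

    model:     hypothetical callable mapping an array shaped like x to an int label
    x:         input array, e.g. an image of shape (H, W)
    sigma_map: noise standard deviation per input entry (broadcastable to x.shape);
               an assumed input, not computed from importance here
    """
    rng = np.random.default_rng(seed)
    counts = {}
    for _ in range(n_samples):
        # Scale unit Gaussian noise separately for every input region.
        noisy = x + rng.normal(size=x.shape) * sigma_map
        label = int(model(noisy))
        counts[label] = counts.get(label, 0) + 1
    # Return the most frequent label together with the raw vote counts.
    return max(counts, key=counts.get), counts


# Toy usage: a 4x4 all-zero "image" and a stand-in model that only looks
# at the left half of its input.
x = np.zeros((4, 4))
sigma_map = np.full((4, 4), 1.0)
sigma_map[:, :2] = 0.1  # different noise level for the left half


def toy_model(z):
    return int(z[:, :2].mean() > 0.5)


label, counts = localized_smoothing_vote(toy_model, x, sigma_map, n_samples=50)
```

The vote counts returned here are the kind of statistic a smoothing-based certificate would start from; turning them into a collective guarantee is the subject of the paper and is not attempted in this sketch.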

Cite

Please cite our paper if you use the method in your own work:

@InProceedings{Schuchardt2023_Localized,
  author = {Schuchardt, Jan and Wollschl{\"a}ger, Tom and Bojchevski, Aleksandar and G{\"u}nnemann, Stephan},
  title = {Localized Randomized Smoothing for Collective Robustness Certification},
  booktitle = {International Conference on Learning Representations (ICLR)},
  year = {2023}
}