Invariance-Aware Randomized Smoothing Certificates

This page links to additional material for our paper:

Invariance-Aware Randomized Smoothing Certificates

by Jan Schuchardt and Stephan Günnemann

Published at Neural Information Processing Systems (NeurIPS) 2022


Abstract

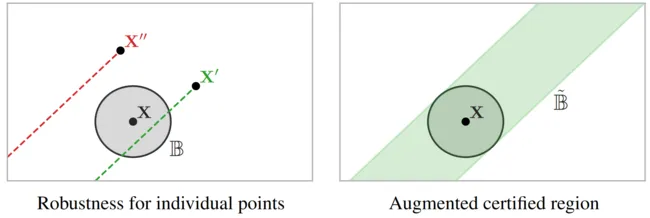

Building models that comply with the invariances inherent to different domains, such as invariance under translation or rotation, is a key aspect of applying machine learning to real-world problems like molecular property prediction, medical imaging, protein folding or LiDAR classification. For the first time, we study how the invariances of a model can be leveraged to provably guarantee the robustness of its predictions. We propose a gray-box approach, enhancing the powerful black-box randomized smoothing technique with white-box knowledge about invariances. First, we develop gray-box certificates based on group orbits, which can be applied to arbitrary models with invariance under permutation and Euclidean isometries. Then, we derive provably tight gray-box certificates. We experimentally demonstrate that the provably tight certificates can offer much stronger guarantees, but that in practical scenarios the orbit-based method is a good approximation.
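The orbit-based idea can be illustrated with a short sketch. This is a hedged illustration in the spirit of the orbit-based certificates described above, not the authors' implementation, and the paper's own construction may differ in its details. It assumes a base classifier on point clouds (an n × d array) that is invariant under rotations and translations, smoothed with isotropic Gaussian noise of scale sigma. It uses the standard Gaussian smoothing radius of Cohen et al. (2019), sigma * Phi^-1(p_a_lower), together with a Kabsch / orthogonal Procrustes alignment. Because isotropic noise commutes with rotations and translations, the smoothed classifier inherits the base model's invariance, so a perturbed input is certified whenever some rotated and translated copy of it lies inside the certified l2 ball around the clean input. The function names and the lower bound p_a_lower are hypothetical, and estimating p_a_lower by Monte Carlo sampling is omitted.

```python
import math
from statistics import NormalDist

import numpy as np


def gaussian_radius(p_a_lower: float, sigma: float) -> float:
    """Certified l2 radius of a Gaussian-smoothed classifier (Cohen et al., 2019).

    p_a_lower is a lower confidence bound on the top-class probability under
    noise N(0, sigma^2 I); here it is assumed to be given (hypothetical input).
    """
    if p_a_lower <= 0.5:
        return 0.0  # no certificate: the smoothed classifier would abstain
    if p_a_lower >= 1.0:
        return math.inf
    return sigma * NormalDist().inv_cdf(p_a_lower)


def orbit_distance(x: np.ndarray, x_pert: np.ndarray,
                   allow_reflections: bool = False) -> float:
    """Smallest Frobenius distance between point cloud x (n x d) and any
    rotated (optionally reflected) and translated copy of x_pert."""
    # Translations: centering both clouds removes the optimal shift.
    xc = x - x.mean(axis=0)
    pc = x_pert - x_pert.mean(axis=0)
    # Rotations: orthogonal Procrustes / Kabsch via SVD of the cross-covariance.
    u, _, vt = np.linalg.svd(pc.T @ xc)
    signs = np.ones(x.shape[1])
    if not allow_reflections:
        # Flip the direction of the smallest singular value if needed,
        # so that the optimal map is a proper rotation (det = +1).
        signs[-1] = np.sign(np.linalg.det(u @ vt))
    rot = u @ np.diag(signs) @ vt
    return float(np.linalg.norm(pc @ rot - xc))


def certify_with_invariance(x, x_pert, p_a_lower, sigma,
                            allow_reflections=False) -> bool:
    """True if the smoothed prediction at x provably also holds at x_pert,
    assuming the base model is invariant under rotations and translations."""
    radius = gaussian_radius(p_a_lower, sigma)
    return orbit_distance(x, x_pert, allow_reflections) < radius


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=(10, 3))
    # 90-degree rotation about the z-axis followed by a large shift.
    rot_z = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
    x_pert = x @ rot_z.T + np.array([5.0, -2.0, 1.0])
    print(np.linalg.norm(x - x_pert))  # large: a plain l2 certificate fails
    print(orbit_distance(x, x_pert))   # close to 0: x_pert lies in the orbit of x
    print(certify_with_invariance(x, x_pert, p_a_lower=0.9, sigma=0.25))  # True
```

The plain l2 distance between the two clouds far exceeds the certified radius, while the distance between their orbits is essentially zero, which is exactly the gap that invariance-aware certificates exploit. Setting allow_reflections=True corresponds to a model that is also invariant under reflections, i.e. under all Euclidean isometries.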

Cite

Please cite our paper if you use the method in your own work:

@InProceedings{Schuchardt2022_Invariance,
author = {Schuchardt, Jan and G{\"u}nnemann, Stephan},
title = {Invariance-Aware Randomized Smoothing Certificates},
booktitle = {Conference on Neural Information Processing Systems (NeurIPS)},
year = {2022}
}