Collective Robustness Certificates: Exploiting Interdependence in Graph Neural Networks

This page links to additional material for our paper:

Collective Robustness Certificates: Exploiting Interdependence in Graph Neural Networks

by Jan Schuchardt, Aleksandar Bojchevski, Johannes Gasteiger and Stephan Günnemann

Published at the International Conference on Learning Representations (ICLR) 2021

Links

[Paper | GitHub | Poster | Slides]

Abstract

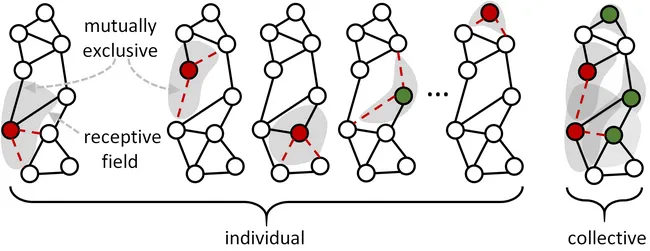

In tasks like node classification, image segmentation, and named-entity recognition, we have a classifier that simultaneously outputs multiple predictions (a vector of labels) based on a single input, i.e., a single graph, image, or document, respectively. Existing adversarial robustness certificates consider each prediction independently and are thus overly pessimistic for such tasks. They implicitly assume that an adversary can use different perturbed inputs to attack different predictions, ignoring the fact that we have a single shared input. We propose the first collective robustness certificate, which computes the number of predictions that are simultaneously guaranteed to remain stable under perturbation, i.e., cannot be attacked. We focus on Graph Neural Networks and leverage their locality property (perturbations only affect the predictions in a close neighborhood) to fuse multiple single-node certificates into a drastically stronger collective certificate.
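To make the idea of fusing single-node certificates concrete, here is a minimal toy sketch under simplifying assumptions that are ours, not the paper's: each node n has a receptive field (the set of input positions that can influence its prediction) and a hypothetical per-node radius r_n, meaning its single-node certificate holds as long as at most r_n positions inside its receptive field are perturbed. The node-wise count certifies a node only if r_n covers the entire global budget. The collective count instead searches for the single perturbation, shared by all nodes, that breaks the most certificates at once. The function names are hypothetical, and the brute-force enumeration is exponential and only meant for tiny examples; the paper solves the collective problem as an optimization problem rather than by enumeration.

```python
from itertools import combinations


def naive_certified(radii, budget):
    # Node-wise view: each prediction may face its own worst-case perturbation,
    # so a node counts as certified only if its radius covers the whole budget.
    return sum(1 for r in radii if r >= budget)


def collective_certified(receptive_fields, radii, budget, positions):
    # Collective view (toy brute force): the adversary picks ONE set of at most
    # `budget` perturbed positions, shared by all predictions. A node's
    # certificate breaks only if more than r_n of those positions fall inside
    # its receptive field (the locality assumption).
    max_attacked = 0
    for k in range(budget + 1):
        for perturbed in combinations(positions, k):
            p = set(perturbed)
            attacked = sum(
                1 for rf, r in zip(receptive_fields, radii) if len(p & rf) > r
            )
            max_attacked = max(max_attacked, attacked)
    # Nodes that no single shared perturbation can attack simultaneously.
    return len(radii) - max_attacked


# Hypothetical example: path graph 0-1-2-3-4, node-feature perturbations,
# and a GNN with 1-hop receptive fields.
RECEPTIVE_FIELDS = [{0, 1}, {0, 1, 2}, {1, 2, 3}, {2, 3, 4}, {3, 4}]
RADII = [1, 1, 1, 1, 1]
BUDGET = 2

if __name__ == "__main__":
    print("naive:", naive_certified(RADII, BUDGET))
    print("collective:", collective_certified(RADII and RECEPTIVE_FIELDS, RADII, BUDGET, range(5)))
```

In this toy setting no single node's radius covers a budget of 2, so the node-wise count certifies nothing. Any shared pair of perturbed positions, however, lies together in at most two receptive fields, so at most two predictions can be flipped at once and the collective count certifies three of the five nodes.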

Cite

Please cite our paper if you use the method in your own work:

@InProceedings{Schuchardt2021_Collective,
  author = {Schuchardt, Jan and Bojchevski, Aleksandar and Gasteiger, Johannes and G{\"u}nnemann, Stephan},
  title = {Collective Robustness Certificates: Exploiting Interdependence in Graph Neural Networks},
  booktitle = {International Conference on Learning Representations},
  year = {2021}
}