- Revisiting Inter-Class Maintainability Indicators. 30th IEEE International Conference on Software Analysis, Evolution and Reengineering (SANER), IEEE, 2023, 805–814

- A Preliminary Study on Using Text- and Image-Based Machine Learning to Predict Software Maintainability. In: Software Quality: The Next Big Thing in Software Engineering and Quality. Springer International Publishing, 2022

- Efficient Platform Migration of a Mainframe Legacy System Using Custom Transpilation. 2021 IEEE International Conference on Software Maintenance and Evolution (ICSME), IEEE, 2021

- Human-level Ordinal Maintainability Prediction Based on Static Code Metrics. Evaluation and Assessment in Software Engineering, ACM, 2021

- Defining a Software Maintainability Dataset: Collecting, Aggregating and Analysing Expert Evaluations of Software Maintainability. 2020 IEEE International Conference on Software Maintenance and Evolution (ICSME), IEEE, 2020

- A Software Maintainability Dataset. 2020

- Learning a Classifier for Prediction of Maintainability Based on Static Analysis Tools. 2019 IEEE/ACM 27th International Conference on Program Comprehension (ICPC), IEEE, 2019

- Software quality assessment in practice. Proceedings of the 12th ACM/IEEE International Symposium on Empirical Software Engineering and Measurement - ESEM '18, ACM Press, 2018

Machine Learning Assisted Software Maintainability Assessments

Project Description

This research project strengthens the idea that machine-learned models, particularly models based on static code metrics, can contribute towards automated software maintainability assessments. To ensure the long-term viability of a software system, it is essential to control its maintainability. Though more reliable and precise than tool-based assessments, evaluations by experts are time-consuming and expensive. One way to combine the advantages of fast tools and precise experts is using Machine Learning (ML) to classify maintainability as experts would perceive it. The goal of this project is to close the gap between expert-based assessments and machine-learned classifications. Hence, we provide a structured model of contemporary expert-based assessments and investigate how this process can be supported with ML techniques.

Project Duration: 2018-2022

Contributions and Results

This research contributes several assets to the state of the art:

- Our structured evaluation model sheds light on how experts at an industrial partner perform maintainability assessments in practice.

- As a basis for our ML-driven research, we contribute a manually labeled software maintainability dataset. Here, we selected a representative sample from nine software projects and surveyed 70 professionals affiliated with 17 institutions. The resulting dataset contains the consensus of the experts for a total of 519 code files.

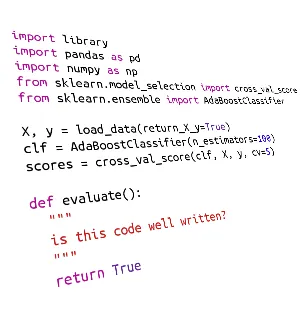

- Using this dataset, we compare several ML classification algorithms and investigate different ways to represent the source code as input to these models.

- Eventually, the best-performing classifiers are consolidated into a prototypical tool that combines the necessary data preparation, inference of the maintainability ratings, and a web-based frontend.

In summary, this study shows that ML, especially metric-based classification models, contributes to efficient maintainability assessments by identifying hard-to-maintain code with high precision. However, despite this good performance, we conclude that the final interpretation of the findings should remain in the hands of a human analyst.
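As a reminder of what "high precision" means for this use case, the sketch below computes the precision of the hard-to-maintain class: of all files a classifier flags as hard to maintain, the share that the expert consensus also rates as hard to maintain. It assumes precision is meant in the usual classification sense; the label names, the function, and the example data are hypothetical and do not come from the project.

```python
def precision(expert_labels, predicted_labels, positive="hard"):
    """Share of files predicted as `positive` that experts also rated `positive`."""
    # Expert labels of all files the classifier flagged as positive.
    flagged = [e for e, p in zip(expert_labels, predicted_labels) if p == positive]
    if not flagged:
        return None  # precision is undefined when nothing is flagged
    true_positives = sum(1 for e in flagged if e == positive)
    return true_positives / len(flagged)


# Invented example: six files, expert consensus vs. classifier output.
expert = ["hard", "easy", "hard", "easy", "easy", "hard"]
predicted = ["hard", "easy", "hard", "hard", "easy", "easy"]

hard_precision = precision(expert, predicted)
print(f"precision for 'hard': {hard_precision:.2f}")
```

A high value means that few files are flagged needlessly, which matters when a human analyst reviews every flagged file.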

A Software Maintainability Dataset

Before we can control the maintainability of software systems, we need to assess it. Current automatic approaches have been criticized because their results often do not reflect the opinion of experts, are biased towards a small group of experts, or rely on problematic surrogate measures for software maintainability. In contrast, we refer to the consensus judgment of at least three study participants per code file. In total, 70 professionals assessed code from nine open- and closed-source Java projects with a combined size of 1.4 million source lines of code. Each assessment covers an overall judgment as well as several subdimensions of maintainability. The resulting dataset contains the expert consensus for 519 Java classes. Our analysis revealed that evaluators frequently disagree, which emphasizes the need to refer to the consensus of several experts. In addition, the average deviation of an expert from the consensus provides an illustrative human-level performance baseline against which the trained ML models can be evaluated.
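The idea of a per-file consensus and a human-level baseline can be illustrated with a minimal sketch. It assumes a four-point ordinal scale and uses the low median as the aggregation rule; both are simplifying assumptions for illustration, and the aggregation actually used for the dataset is described in the referenced publication. All file names, rater ids, and ratings are invented.

```python
from statistics import mean, median_low

# Hypothetical ratings on an assumed ordinal scale
# (0 = easy to maintain ... 3 = hard to maintain).
ratings = {
    "Parser.java": {"rater_a": 0, "rater_b": 1, "rater_c": 0},
    "Scheduler.java": {"rater_a": 3, "rater_b": 2, "rater_c": 3},
    "Cache.java": {"rater_a": 1, "rater_b": 2, "rater_c": 2, "rater_d": 3},
}


def consensus_label(votes):
    """Aggregate ordinal votes with the low median (an assumed, simple rule)."""
    if len(votes) < 3:
        raise ValueError("need at least three ratings per file")
    return median_low(votes)


def human_baseline(ratings):
    """Mean absolute deviation of individual ratings from the per-file consensus."""
    deviations = []
    for votes_by_rater in ratings.values():
        votes = list(votes_by_rater.values())
        label = consensus_label(votes)
        deviations.extend(abs(vote - label) for vote in votes)
    return mean(deviations)


consensus = {name: consensus_label(list(v.values())) for name, v in ratings.items()}
baseline = human_baseline(ratings)
print(consensus)
print(f"average deviation from consensus: {baseline:.2f}")
```

A trained model whose average deviation from the consensus is no larger than this baseline would, under these assumptions, disagree with the consensus about as much as a typical individual expert does.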

For more details about the creation of this dataset, please refer to: M. Schnappinger, A. Fietzke, and A. Pretschner, "Defining a Software Maintainability Dataset: Collecting, Aggregating and Analysing Expert Evaluations of Software Maintainability", International Conference on Software Maintenance and Evolution (ICSME), 2020.

The dataset, i.e., the code plus labels and instructions on how to use the data, is available from here.

Related Publications

- On Machine Learning Assisted Software Maintainability Assessments. Dissertation, 2023