Object Alignment in Mixed Reality

Abstract

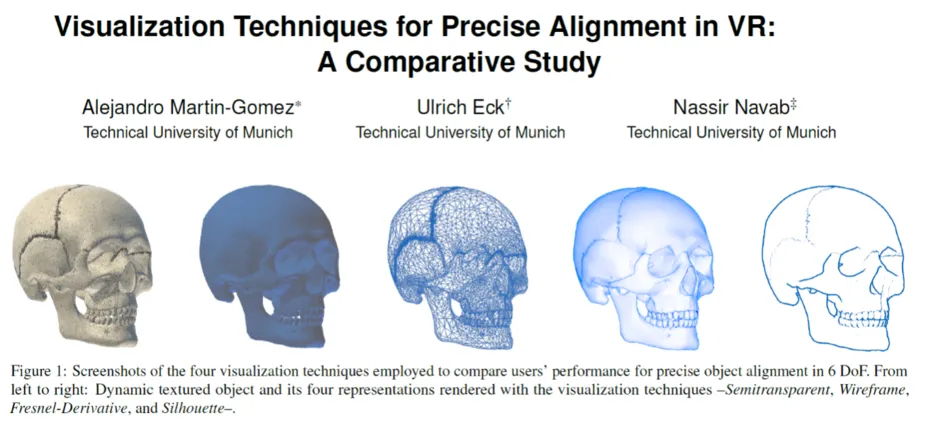

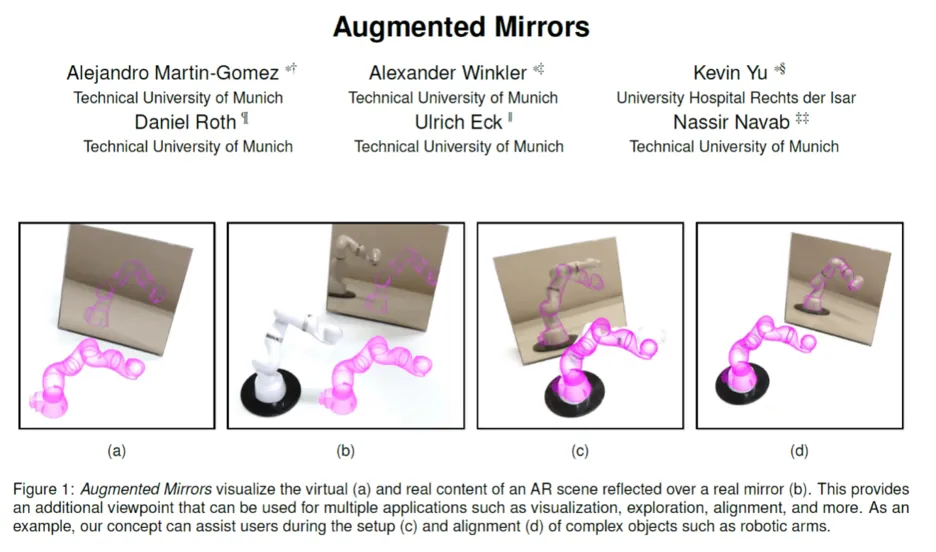

Implicit guidance approaches assist users in aligning real and virtual objects in Mixed Reality applications without the need to track the real objects. These methods display virtual replicas of the real objects to indicate the desired alignment pose. In this scenario, alignment accuracy depends strongly on the user's performance and on the quality of the visual information provided.
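Alignment accuracy in such tasks is often reported as the translational and rotational offset between the pose the user achieved and the target pose shown by the virtual replica. The sketch below is illustrative only and is not taken from this project: it assumes both poses are given as a 3x3 rotation matrix plus a translation vector in a shared coordinate frame, and the function names (`rotation_error_deg`, `translation_error`, `rot_z`) are hypothetical helpers. The rotation error is the angle of the relative rotation, recovered from its trace.

```python
import math


def matmul_t(a, b):
    """Return a^T @ b for 3x3 matrices given as nested lists."""
    return [[sum(a[k][i] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def rotation_error_deg(r_target, r_actual):
    """Angle (degrees) of the relative rotation between target and achieved orientation."""
    r_rel = matmul_t(r_target, r_actual)
    trace = r_rel[0][0] + r_rel[1][1] + r_rel[2][2]
    # Clamp to [-1, 1] to guard acos against floating-point drift.
    cos_theta = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_theta))


def translation_error(t_target, t_actual):
    """Euclidean distance between target and achieved positions (same units as input)."""
    return math.dist(t_target, t_actual)


def rot_z(deg):
    """Hypothetical helper: rotation about the z-axis by `deg` degrees."""
    a = math.radians(deg)
    return [[math.cos(a), -math.sin(a), 0.0],
            [math.sin(a), math.cos(a), 0.0],
            [0.0, 0.0, 1.0]]


if __name__ == "__main__":
    # Assumed example: the real object ends up 3 mm / 4 mm off and rotated 5 degrees.
    print(translation_error([0.0, 0.0, 0.0], [3.0, 4.0, 0.0]))
    print(rotation_error_deg(rot_z(0.0), rot_z(5.0)))
```

With the assumed poses above, the translation error is 5 (in whatever unit the positions use, e.g. millimetres) and the rotation error is about 5 degrees. Reporting the two components separately keeps positional and angular mistakes distinguishable, which matters when comparing guidance methods that may affect them differently.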

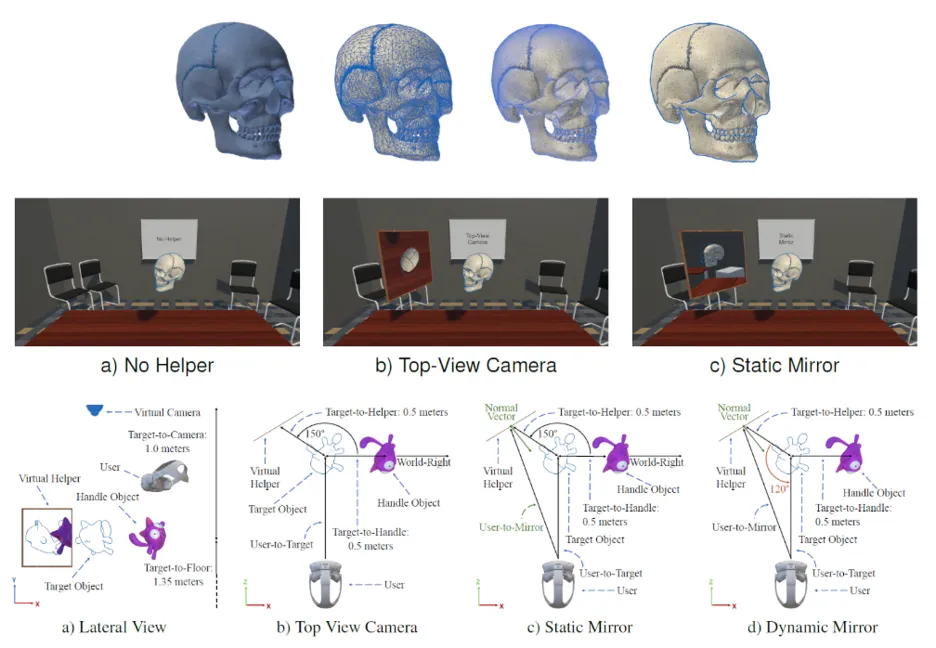

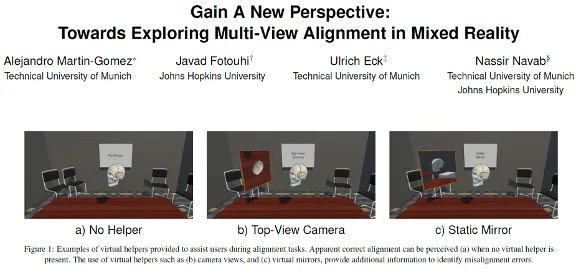

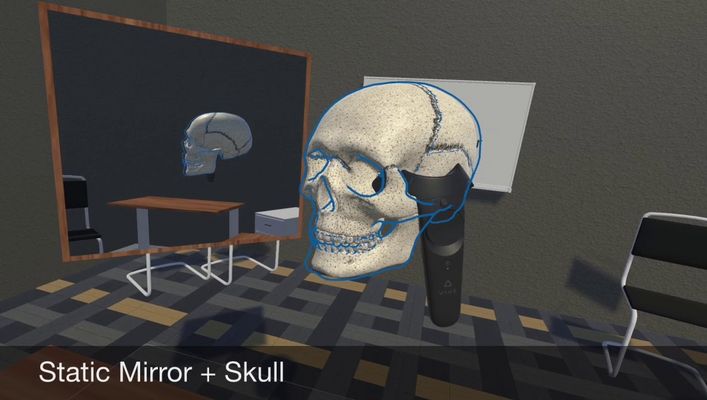

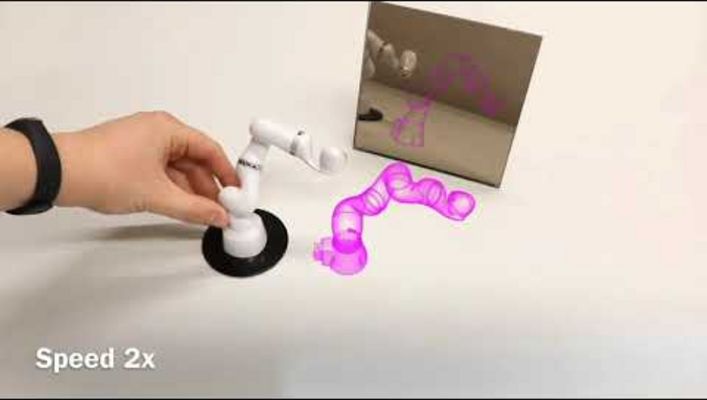

This project explores different approaches to providing implicit guidance and assisting users during alignment tasks. Example methods include reducing the occlusion that occurs when the real and virtual objects overlap, and offering multiple viewpoints, such as mirrors and cameras, while the task is performed.

Keywords: Mixed Reality, Augmented Reality, Visualization, Perception, Object Alignment

Research Partner

Javad Fotouhi (javad.fotouhi(at)jhu.edu)