Severin Engelmann, Ph.D.

Since September 1, 2023, Severin has been a postdoctoral fellow at Cornell Tech in New York: https://www.dli.tech.cornell.edu/members/Engelmann

Email: se373@cornell.edu

He remains affiliated with the Professorship of Cyber Trust as a guest researcher. Please note that he cannot supervise bachelor's or master's theses.

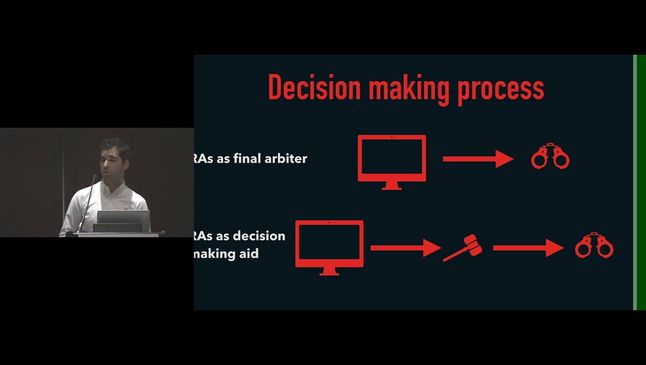

With a background in philosophy of technology and computer science, Severin is an ethicist focusing on the ethics of digital platforms and systems. His research explores the transparency of digitalized reputation mechanisms in the Chinese Social Credit System and examines the feasibility of participatory governance of commercial social media platforms. Currently, he studies how people without AI expertise ethically evaluate the inferences AI systems make in computer vision decision-making scenarios. In this project, he also investigates whether, and to what extent, participatory approaches to AI ethics help advance the ethical governance of algorithmic systems. Severin applies a multidisciplinary methodology that combines conceptual and theoretical work, data-driven policy analyses, and experimental vignette studies.

Visiting Positions:

Between January and May 2022, Severin was a visiting scholar at the School of Information at UC Berkeley, USA, hosted by Prof. Deirdre Mulligan.

Between April and August 2021, Severin was a visiting scholar in Prof. Anna Baumert's research group Moral Courage at the Max Planck Institute for Research on Collective Goods in Bonn, Germany.

Research Interests:

Participatory & experimental approaches to AI ethics, ethics of classification systems, the Chinese Social Credit System.

E-mail: engelmas@in.tum.de | severin.engelmann@tum.de

Twitter: https://twitter.com/SeverinEngelma1

Doctoral Dissertation

Engelmann, S. (2023) Understanding the Legitimacy of Digital Socio-Technical Classification Systems, Technical University of Munich.

Publications

- Engelmann, S., Choksi, M., Wang, A., & Fiesler, C. (2024) Visions of a Discipline: Analyzing Introductory AI Courses on YouTube. Proceedings of the 2024 ACM Conference on Fairness, Accountability, and Transparency (ACM FAccT), pp. 2400-2420. Acceptance rate = 24.1% (725 submissions, 175 accepted full papers) Publisher Version (Open Access) Author Version

- Ullstein, C., Engelmann, S., Papakyriakopoulos, O., Ikkatai, Y., Arnez Jordan, N., Caleno, R., Mboya, B., Higuma, S., Hartwig, T., Yokoyama, H., & Grossklags, J. (2024) Attitudes Toward Facial Analysis AI: A Cross-National Study Comparing Argentina, Kenya, Japan, and the USA. Proceedings of the 2024 ACM Conference on Fairness, Accountability, and Transparency (ACM FAccT), pp. 2273-2301. Acceptance rate = 24.1% (725 submissions, 175 accepted full papers) Publisher Version (Open Access) Author Version

- Ullstein, C., Engelmann, S., Papakyriakopoulos, O., & Grossklags, J. (2023) A Reflection on How Cross-Cultural Perspectives on the Ethics of Facial Analysis AI Can Inform EU Policymaking. Proceedings of the 2nd European Workshop on Algorithmic Fairness (EWAF). Publisher Version (Open Access)

- Papakyriakopoulos, O., Engelmann, S., & Winecoff, A. (2023) Upvotes? Downvotes? No Votes? Understanding the Relationship Between Reaction Mechanisms and Political Discourse on Reddit. Proceedings of the ACM on Human-Computer Interaction (ACM CHI), Article No. 549. Author Version Publisher Version

- Chen, M., Engelmann, S., & Grossklags, J. (2023) Social Credit System and Privacy. In: Trepte, S., & Masur, P. (Eds.) The Routledge Handbook of Privacy and Social Media, pp. 227-236. Routledge. Publisher Version (Open Access)

- Ullstein, C., Engelmann, S., Papakyriakopoulos, O., Hohendanner, M., & Grossklags, J. (2022) AI-competent Individuals and Laypeople Tend to Oppose Facial Analysis AI. Proceedings of the Second ACM Conference on Equity and Access in Algorithms, Mechanisms, and Optimization (ACM EAAMO), Article No. 9. Author Version Publisher Version (Open Access)

- Engelmann, S., Scheibe, V., Battaglia, F., & Grossklags, J. (2022) Social Media Profiling Continues to Partake in the Development of Formalistic Self-concepts. Social Media Users Think So, Too. Proceedings of the 5th AAAI/ACM Conference on AI, Ethics, and Society (AAAI/ACM AIES), pp. 238–252. Author Version Publisher Version (Open Access)

- Chen, M., Engelmann, S., & Grossklags, J. (2022) Ordinary People as Moral Heroes and Foes: Digital Role Model Narratives Propagate Social Norms in China's Social Credit System. Proceedings of the 5th AAAI/ACM Conference on AI, Ethics, and Society (AAAI/ACM AIES), pp. 181–191. Publisher Version (Open Access)

- Engelmann, S., Ullstein, C., Papakyriakopoulos, O., & Grossklags, J. (2022) What People Think AI Should Infer from Faces. Proceedings of the ACM Conference on Fairness, Accountability, and Transparency (ACM FAccT), pp. 128–141. Author Version Appendix Publisher Version (Open Access)

- Cypris, N., Engelmann, S., Sasse, J., Grossklags, J., & Baumert, A. (2022) Intervening Against Online Hate Speech: A Case for Automated Counterspeech. IEAI Research Brief, Technical University of Munich.

- Engelmann, S., Chen, M., Dang, L., & Grossklags, J. (2021) Blacklists and Redlists in the Chinese Social Credit System: Diversity, Flexibility, and Comprehensiveness. Proceedings of the 4th AAAI/ACM Conference on AI, Ethics, and Society (AAAI/ACM AIES), pp. 78–88. Full paper; oral presentation. Author Version Publisher Version (Open Access)

- Engelmann, S., Grossklags, J., & Herzog, L. (2020) Should Users Participate in Governing Social Media? Philosophical and Technical Considerations of Democratic Social Media. First Monday, 25(12). Publisher Version (Open Access)

- Engelmann, S., & Grossklags, J. (2019) Setting the Stage: Towards Principles for Reasonable Image Inferences. Workshop on Fairness in User Modeling, Adaptation and Personalization (FairUMAP), 27th Conference on User Modeling, Adaptation and Personalization (ACM UMAP). Author Version Publisher Version

- Engelmann, S., Chen, M., Fischer, F., Kao, C., & Grossklags, J. (2019) Clear Sanctions, Vague Rewards: How China's Social Credit System Currently Defines “Good” and “Bad” Behavior. Proceedings of the 2nd ACM Conference on Fairness, Accountability, and Transparency (ACM FAT*), Atlanta, Georgia, January 2019. Author Version Publisher Version

- Engelmann, S., Grossklags, J., & Papakyriakopoulos, O. (2018) A Democracy called Facebook? Participation as a Privacy Strategy on Social Media. Proceedings of the Annual Privacy Forum 2018. Lecture Notes in Computer Science (LNCS). Full paper. Author Version Publisher Version

Conference Talks & Panels

2023

- Talk on How Cross-Cultural Perspectives on the Ethics of Facial Analysis AI Can Inform EU Policymaking at the European Workshop on Algorithmic Fairness (EWAF), Winterthur, Switzerland.

- Talk on How People Ethically Evaluate Facial Analysis AI: A Cross-Cultural Study in Japan, Argentina, Kenya, and the United States at the Many Worlds of AI Conference, Cambridge, United Kingdom.

2022

- Lightning talk on Narratives in the Chinese Social Credit System at the AAAI/ACM Conference on AI, Ethics, and Society (AIES) 2022, Oxford, United Kingdom.

- Lightning talk on Formalistic Self-Concepts & Social Media Profiling at the AAAI/ACM Conference on AI, Ethics, and Society (AIES) 2022, Oxford, United Kingdom.

- Talk on What People Think AI Should Infer from Faces at the ACM Conference on Fairness, Accountability, and Transparency (FAccT) 2022, Seoul, South Korea.

- Talk on The Ethics of Intervening Against Hate Speech: A Case for Automated Counterspeech at the Ethics, Society, & Technology Unconference 2022, Stanford University, USA.

2021

- Talk on the Epistemic Soundness of AI Personality Inferences Based on Visual Data at the CEPE/International Association for Computing and Philosophy (IACAP) Joint Conference 2021: The Philosophy and Ethics of Artificial Intelligence. Virtual conference.

- Talk on Blacklists and Redlists in the Chinese Social Credit System: Diversity, Flexibility, and Comprehensiveness at the 4th AAAI/ACM Conference on AI, Ethics, and Society (AIES). Virtual conference.

- Talk on What People Think AI Should Infer from Faces at the Ethics and Technology Lecture Series of the Munich Center for Technology in Society, Munich, Germany.

2020

- Speaker on AI, Risk Emergence & Zero Trust Networks at the Tenth International Conference on Complex Systems, Boston, USA.

2019

- Talk on Ethical Implications of Image-based User Modeling at FairUMAP, UMAP 2019, Larnaca, Cyprus.

- Talk on Reasonable Image Inferences at Metaethics of AI & Self-learning Robots (Workshop), Venice International University & Ludwig Maximilian University of Munich, Venice, Italy.

- Talk on Clear Sanctions, Vague Rewards: How the Chinese Social Credit System Currently Defines "Good" and "Bad" Behavior at the ACM Conference on Fairness, Accountability, and Transparency (FAT*), Atlanta, USA.

2018

- Talk on Notions of Good and Bad in the Chinese Social Credit System at Forum Privatheit, Munich, Germany.

- Talk on Facebook's Power to Generate Data Narratives at the Amsterdam Privacy Conference, Amsterdam, The Netherlands.

- Talk on Pitfalls of Social Media Democracy at the Amsterdam Privacy Conference, Amsterdam, The Netherlands.

- Talk on Facebook Democracy? at the Annual Privacy Forum, Barcelona, Spain.

Media

"What would it take to turn Facebook into a democracy?" Blog post for Justice Everywhere, co-authored with Prof. Lisa Herzog (March 4, 2019).

"China's Social Credit System won't tell you what's right." TechCrunch reports on the paper "Clear Sanctions, Vague Rewards: How China's Social Credit System Currently Defines 'Good' and 'Bad' Behavior" (January 28, 2019).

Awards

Weizenbaum Student Prize 2018 (October 2018), awarded for his master's thesis on Facebook's capacity to generate data narratives.

- Work presented at https://2018.fiffkon.de/

- More information: www.fiff.de/studienpreis

Teaching

Seminars

- The Value of Privacy

- Trust in Automated Decision-Making

- Transparency of Algorithmic Systems

Lecture

- IT and Society

Contact

Email: severin.engelmann(at)tum.de

Phone: +49 (89) 289 - 17746

Twitter: https://twitter.com/SeverinEngelma1