News

New Article Published in Information Systems Frontiers: The Present and Future of Accountability for AI Systems: A Bibliometric Analysis

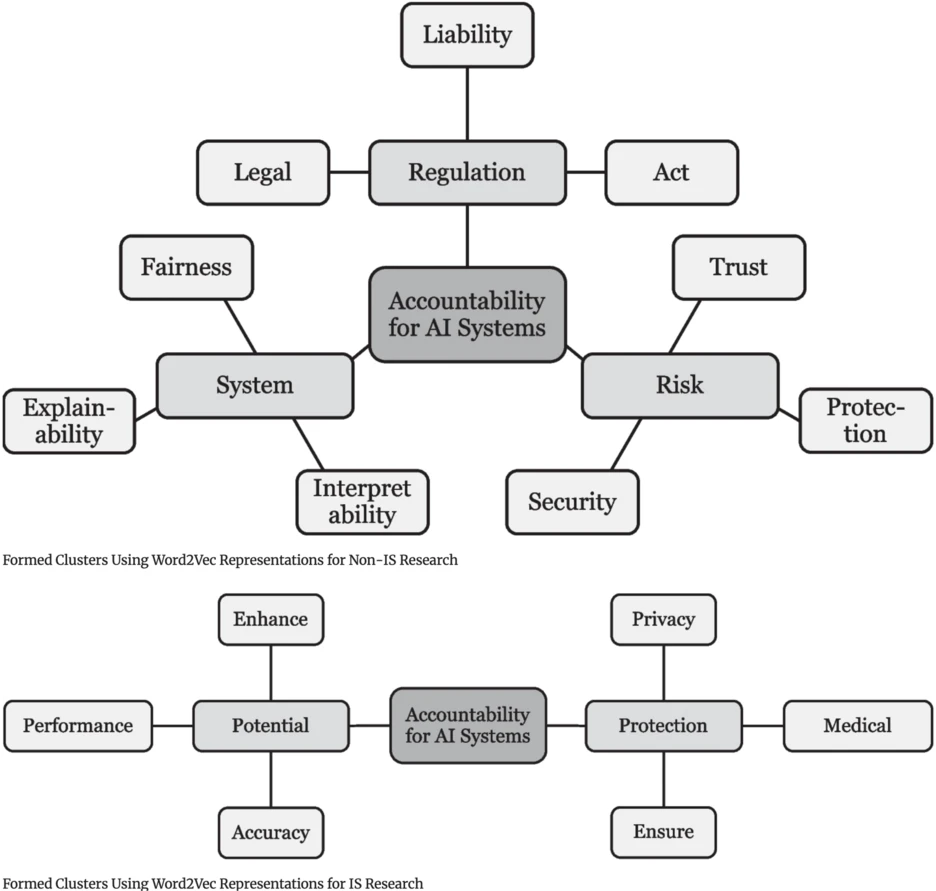

As part of our long-standing and successful collaboration with Alexander Benlian's team at TU Darmstadt, we have published an article in the journal Information Systems Frontiers. In the article, we shed light on the current state of AI accountability research through a bibliometric analysis of more than 5,000 research articles. Our findings point future research toward concrete avenues to pursue.

Abstract:

Artificial intelligence (AI) systems, particularly generative AI systems, present numerous opportunities for organizations and society. As AI systems become more powerful, ensuring their safe and ethical use necessitates accountability, requiring actors to explain and justify any unintended behavior and outcomes. Recognizing the significance of accountability for AI systems, research from various research disciplines, including information systems (IS), has started investigating the topic. However, accountability for AI systems appears ambiguous across multiple research disciplines. Therefore, we conduct a bibliometric analysis with 5,809 publications to aggregate and synthesize existing research to better understand accountability for AI systems. Our analysis distinguishes IS research, defined by the Web of Science “Computer Science, Information Systems” category, from related non-IS disciplines. This differentiation highlights IS research’s unique socio-technical contribution while ensuring and integrating insights from across the broader academic landscape on accountability for AI systems. Building on these findings, we derive research propositions to lead future research on accountability for AI systems. Finally, we apply these research propositions to the context of generative AI systems and derive a research agenda to guide future research on this emerging topic.

You can read and cite the article here: https://doi.org/10.1007/s10796-025-10636-9